ML Project

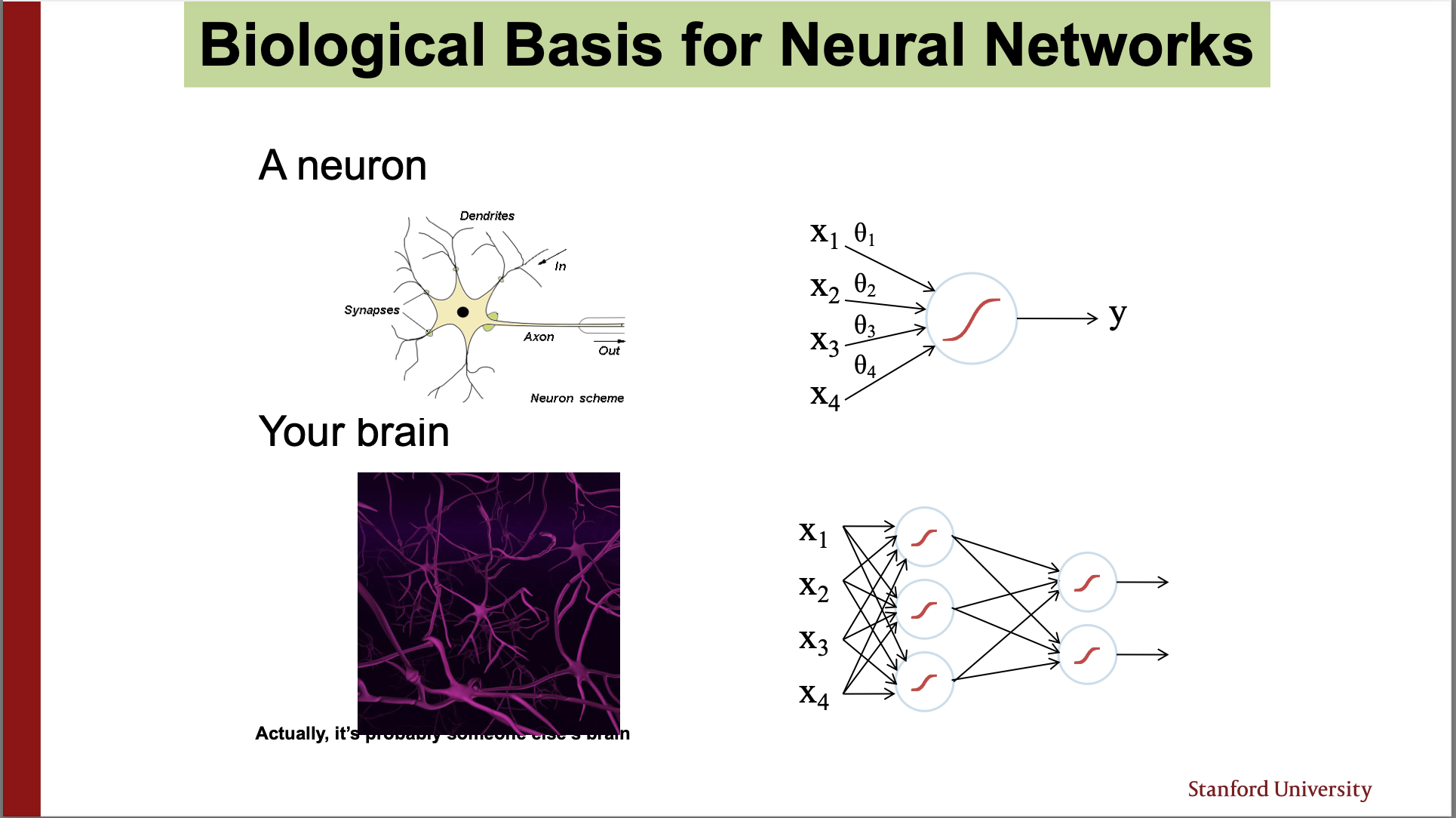

- Skills: Python, NumPy, SciPy, Neural Networks

- Class: CS 109: Probability for Computer Scientists

- Project date: May 2020

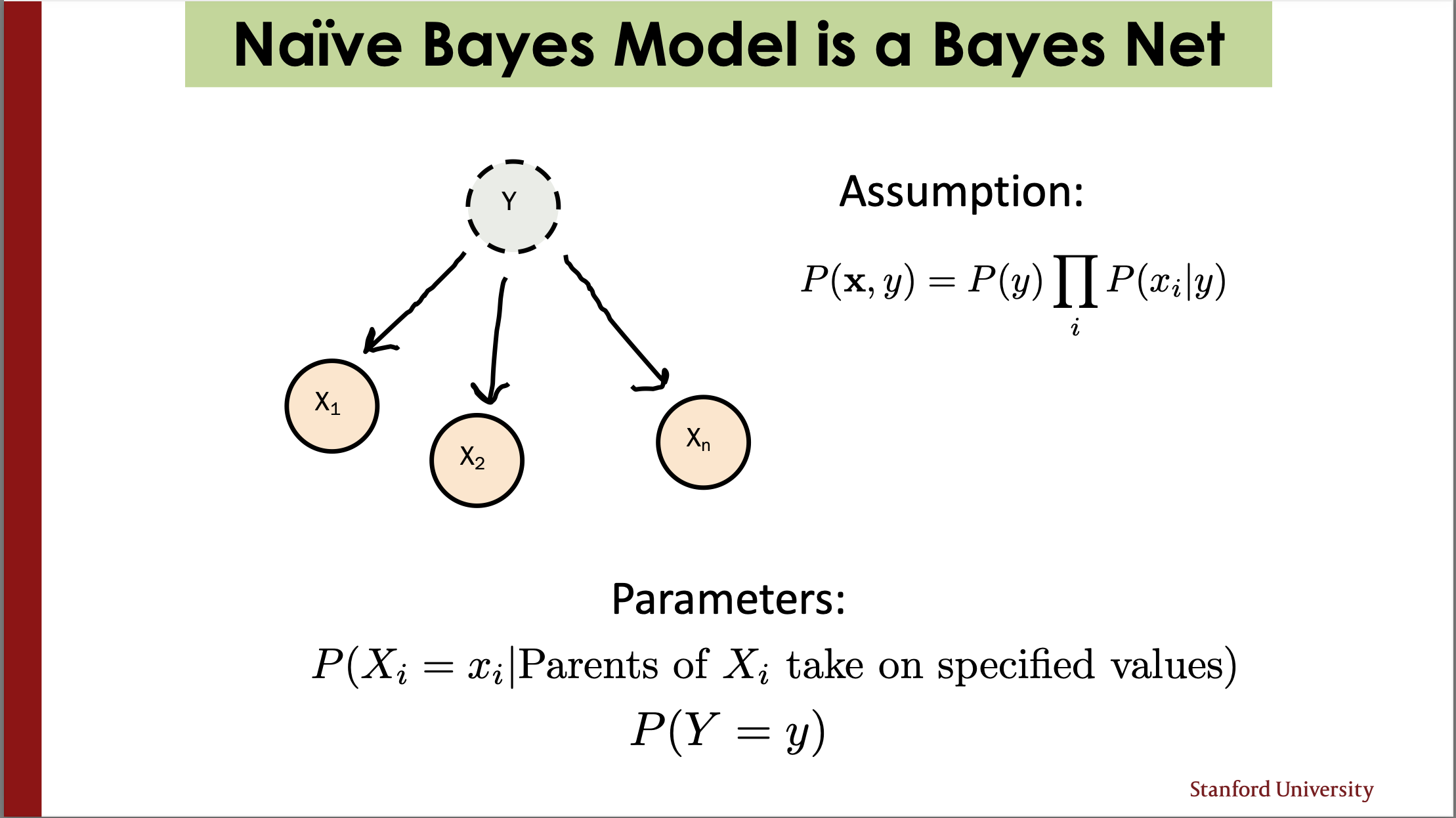

Building a machine learning classifier with Naive Bayes and Logistic Regression

As the final project in Stanford's core computer science probability course, we were tasked with building a machine learning model that can classify multiple datasets. Two example datasets were Netflix recommendations and disease detection: given x movies a user likes, will the user like movie y (Y/N), and given z symptoms that a user exhibits, does the user have a certain disease (Y/N)?
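To make the Naive Bayes half of the task concrete, here is a minimal sketch rather than the project's actual code. It assumes every input feature is binary (liked / not liked, symptom present / absent) and that labels are encoded as 1 for Yes and 0 for No. The function names, the toy data, and the use of Laplace (add-one) smoothing are all illustrative assumptions.

```python
import numpy as np

def fit_naive_bayes(X, y):
    """Estimate Bernoulli Naive Bayes parameters (assumes 0/1 features and labels)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=int)
    params = {}
    for label in (0, 1):
        rows = X[y == label]
        # P(x_i = 1 | y = label) with add-one smoothing (an assumption of this sketch)
        p_feature = (rows.sum(axis=0) + 1.0) / (rows.shape[0] + 2.0)
        # P(y = label), smoothed the same way
        prior = (rows.shape[0] + 1.0) / (X.shape[0] + 2.0)
        params[label] = (prior, p_feature)
    return params

def predict_naive_bayes(params, X):
    """Return 1 (Yes) or 0 (No) for each row by comparing log-posteriors."""
    X = np.asarray(X, dtype=float)
    scores = []
    for label in (0, 1):
        prior, p = params[label]
        # Sum of per-feature log-likelihoods under the independence assumption
        log_lik = X @ np.log(p) + (1.0 - X) @ np.log(1.0 - p)
        scores.append(np.log(prior) + log_lik)
    return (scores[1] > scores[0]).astype(int)

# Hypothetical toy data: 4 users, 3 binary features, binary label
X_toy = np.array([[1, 1, 0], [1, 0, 0], [0, 1, 1], [0, 0, 1]])
y_toy = np.array([1, 1, 0, 0])

nb_params = fit_naive_bayes(X_toy, y_toy)
nb_pred = predict_naive_bayes(nb_params, X_toy)
```

Working in log space avoids numerical underflow when many per-feature probabilities are multiplied together, which matters as the number of features grows.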

I built this primarily in Python and used popular libraries such as NumPy and SciPy to organize the data and run it through the model efficiently, with vectorized matrix operations in the gradient descent step. We couldn't hard-code any dataset-specific parameters; the model had to work on arbitrary datasets with a varying number of features. What the datasets had in common was that each called for a binary classifier that returns Yes or No for a single output feature y.
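The vectorized gradient descent step might look roughly like the sketch below. It is not the original implementation: the function names, the `learning_rate` and `n_steps` defaults, and the 1/0 encoding of Yes/No are assumptions made for illustration. The number of features is read from the shape of the input, so nothing about a particular dataset is hard-coded.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def add_bias_column(X):
    """Prepend a column of ones so the intercept is learned as theta[0]."""
    X = np.asarray(X, dtype=float)
    return np.hstack([np.ones((X.shape[0], 1)), X])

def fit_logistic_regression(X, y, learning_rate=0.1, n_steps=2000):
    """Batch gradient descent on the mean negative log-likelihood."""
    Xb = add_bias_column(X)
    y = np.asarray(y, dtype=float)
    theta = np.zeros(Xb.shape[1])  # one weight per feature, plus the bias
    for _ in range(n_steps):
        # Gradient for all weights at once: X^T (sigmoid(X theta) - y) / n
        grad = Xb.T @ (sigmoid(Xb @ theta) - y) / Xb.shape[0]
        theta -= learning_rate * grad
    return theta

def predict_logistic_regression(theta, X):
    """Return 1 (Yes) when the predicted probability exceeds 0.5, else 0 (No)."""
    return (sigmoid(add_bias_column(X) @ theta) > 0.5).astype(int)

# Hypothetical toy data: 4 rows, 3 binary features, binary label
X_toy = np.array([[1, 1, 0], [1, 0, 0], [0, 1, 1], [0, 0, 1]])
y_toy = np.array([1, 1, 0, 0])

theta = fit_logistic_regression(X_toy, y_toy)
lr_pred = predict_logistic_regression(theta, X_toy)
```

Computing the gradient as a single matrix product replaces a Python loop over rows and features, which is where most of the speedup from NumPy comes from.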